Understanding why Large Language Models (LLMs) work is essential, but often obscured by technical explanation. This simple, non-technical guide aims to explain the foundational principles of their effectiveness. This understanding does not require delving into technical details or complex mathematics upon which LLMs are built. While the technical implementations are constantly evolving, the essence of this transformative technology remains constant and core to our foundational understanding.

LLMs are a groundbreaking technology, the biggest since arguably the internet and smartphones. It is important for the broader public to grasp the underpinnings of their effectiveness, as the technology is transformational. Yet, the very lack of a deeper understanding leads to widespread misconceptions and prevalent apprehensions, from claims of ineffectiveness to claims they’re Artificial General Intelligence (AGI) that will take over humanity. After this explanation, the hope is that you find neither of those claims reasonable.

What are they?

LLMs are “just” next word / token (tokens can be thought of as partial words) prediction machines.

More generally, LLMs are “just” pattern matching machines.

LLMs demonstrate the extremes of what pattern matching machines are capable of.

One realization is that many human jobs are 'just' pattern matching exercises. This is why it’s so important that most people should understand LLMs.

How do they work?

The technical explanation of how LLMs work have been done well by many others, so I will not cover it. If you’re interested, I highly recommend this series by Youtuber Three Blue One Brown:

A Simple Example: Memorization

Here’s our first example to build our understanding of what LLMs are doing.

Finish this sentence by filling in the blanks:

“To be or not <blank1> <blank2>.”

The answer is: “to be”, completing the first line of Shakespeare’s most famous soliloquy from Hamlet.

By being able to answer this question correctly, there are already a few things we know about answerer, whether it’s human or machine:

They must have had exposure to Shakespeare.

They have the ability to look at all the preceding words, and not just the last word, “not”.

They find the phrase to be notable and/or important, so that they are able to complete this sentence via free recall.

The start of the sentence (“To be or not”) must be sufficiently unique start to them.

“To be or not to be” is an example of the simplest pattern, a pattern with a single correct answer. These simple patterns are simply memorization. Another example of memorization-type patterns revolves around facts, e.g." “The capital of Japan is Tokyo”. Let's look at more complex patterns.

A Simple Pattern

Let’s look at things where there’s not a single right answer, but a number of plausible answers.

Finish this sentence by filling in the blank:

“Calvin’s favorite tropical fruit to eat is <blank>.”

Unlike the previous example, there are many possible answers to the sentence. An important place to start before exploring possible answers is to look at invalid answers:

“rocks” is not valid because it’s not edible.

“milk” is not valid because it's not a fruit.

“blueberry” is not valid because it’s not a tropical fruit.

“bananas” are not correct because it’s not grammatically correct

“orenge” or “sldkfjsdklgha3u” are not valid because they’re not words.

“very” is not valid because it is not syntactically correct.

The pattern of grammar, syntax, and spelling

The last three examples highlight one of the early accomplishments of LLMs, which is to be able to generate grammatically and syntactically correct sentences with proper spelling. Earlier models might have said a lot of nonsensical things, but they said grammatically and syntactically correct nonsensical things with proper spelling. A very important part of language levels are learning how to form correct sentences. While it's possible to enumerate all the rules of the English language, what is done by most people and also by language models, is to apply patterns (e.g. sometimes singular subject is followed by a verb the ends in s) while also memorizing exceptions instead of checking all enumerated rules for each word in their sentence.

Implicit truths

In the space of possible answers, there's a whole spectrum of likely and unlikely answers. There are some really important and interesting features in the unlikely yet possible answers:

“ambrosia” is unlikely because it’s a fictional fruit from mythology, and there’s no context suggesting that Calvin is in a fictional universe, that Calvin is looking for something fictional, or that Calvin is capable of enjoying eating fictional fruits.

“manchineel” is unlikely because it’s highly toxic to humans, and there’s no context suggesting that Calvin is not human.

“orange” is an unlikely answer because there’s widespread misconception that it’s a tropical fruit, when it’s actually subtropical. It’s technically wrong, but colloquially not.

“avocado” is unlikely because most people don’t consider them to be a fruit, even though they’re technically a fruit.

“soursop” is an unlikely answer because it’s rare and most people don’t know of the fruit (even though it’s actually my favorite fruit).

The first two examples implicit assumptions in the sentence completion task. The latter highlights, statistically unlikely answers, based on “common knowledge” of the population of English speakers. These examples are technically correct as they are all tropical fruits, but unlikely in different ways.

What is the real answer?

To determine the best answers to “Calvin’s favorite tropical fruit to eat is <blank>”, we could conduct a survey. We would ask people named Calvin about their favorite tropical fruit, and compile the answers. The most frequently chosen fruits would provide the possible real answers to our question.

For instance, if we surveyed 100 Calvins and found that 40 of them prefer bananas, 25 mangoes, 15 pineapples, 12 papayas, and 8 coconuts, these fruits would form our list of top answers, ranked by popularity. This method doesn't tell us why these fruits are preferred or their exact percentages among all Calvins worldwide, but it gives us a good snapshot:

“banana” - 40%

“mango” - 25%

“pineapple” - 15%

“papaya” - 12%

“coconut” - 8%

This approach of collecting and analyzing responses helps illustrate how LLMs process language. They evaluate vast amounts of data and predict the most likely next word or phrase based on patterns they have observed in the data they were trained on, similar to how we've determined the favorite fruits from our survey results.

To fill in the blank of our question, LLMs would sample from the distributions of possible answers, and pick one at random based on the weighting of each word (i.e. “banana” - 40% would more likely be chosen than “papaya” - 12%).

Inverting the Question

To begin appreciating the nuance and complexity of LLMs, it’s worth asking the inverse question to “Calvin’s favorite tropical fruit to eat is <blank>”, which is to find the all questions that share the same distribution of possible answers: 1) “banana” 2) “mango” 3) “pineapple” 4) “papaya”, 5) “coconut”.

What are questions that yield the same distribution of responses to:

“Calvin’s favorite tropical fruit to eat is <blank>.”

Here are some questions that is highly likely to have the same distribution:

“Calvin’s favorite fruit from tropical areas to eat is <blank>.”

“The tropical fruit that is Calvin’s favorite to eat is <blank>.”

“Calvin’s number 1 tropical fruit that he eats is <blank>.”

“Calvin’s fruit from regions of the world near the equator that he enjoys eating the most is <blank>.”

Here are versions where the <blank> is not at the end, which is valid:

“<blank> is Calvin’s favorite tropical fruit to eat.”

“Calvin favorite tropical fruit to eat is <blank>.”

LLMs (and you) understanding of the essence of the question

Any system that is capable of providing the same distributions to all the variations of the same question demonstrates the ability to capture the core essence of the sentence. LLMs are such a system, ignoring some of the randomness inherent in the technical implementation details.

By the definition of “to understand”, such a system must understand the sentence in order to reliably and predictably produce the same distribution of possible answers under all variations that have the same inherent meaning.

Analyzing similar distributions

Let’s explore questions where the distributions would be similar, but probably not the same.

“Calvin’s favorite fruit is <blank>.”

There are non-tropical fruits that will enter the distribution, e.g. “blueberries”.

“Jonathan’s favorite tropical fruit to eat is <blank>.”

There might be some correlation with names and favorite tropical fruit to eat.

“Catherine’s favorite tropical fruit to eat is <blank>.”

There might be a gender correlation, which is more likely than name-only.

“Kiriyama’s favorite tropical tropical fruit to eat is <blank>.”

There’s definitely a regional correlation with those names, and there’s regional correlations with food preferences.

“Calvin’s favorite fruit to eat is <blank>, which is a tropical fruit.”

“Calvin’s favorite food to eat is <blank>, which is a tropical fruit.”

This is a bit nuanced, as the distributions of Calvin’s whose favorite food/fruit happens to be a tropical fruit might be different than only looking at the favorite tropical fruit of Calvin’s.

Analyzing these subtle differences demonstrates that successful LLMs must encapsulate these observed statistical nuanced of the real-world into their next word / token prediction capabilities.

Longer responses

Longer responses: Memorization

Let’s return to the Shakespeare example. At each step, we will ask the LLM to only generate the next word (let’s simplify and ignore tokens):

Finish the sentence “To be or not”:

“To be or not” → “ to”

“To be or not to” → “ be”

“To be or not to be” → “, that”

“To be or not to be, that” → “ is”

“To be or not to be, that is” → “ the”

“To be or not to be, that is the” → “ question”

“To be or not to be, that is the question” → “.”

“To be or not to be, that is the question.”

Each step is generating the next word, but given the constraints of the input (in this memorization) task, there is only one right answer, so repeated applications will produce the rest of Shakespeare’s Hamlet if the LLM decides that it is important enough and unique enough (we will discuss this in the next section of Memorization → Generalization).

Longer responses: Simple Pattern

Let’s return to discussing my favorite tropical fruit. Similar to before, we will ask the LLM to only generate the next word, but since it’s not a notable literature like Shakespeare, the generations will have more randomness to it.

Finish the sentence “Calvin’s favorite tropical fruit is”:

“Calvin’s favorite tropical fruit is”

→ 1. “banana” 2. “mango” 3. “pineapple”

“Calvin’s favorite tropical fruit is pineapple”

→ 1. “!” 2. “ , despite” 3. “ , despite”

“… tropical fruit is pineapple, despite”

→ 1. “ their” 2. “ how” 3. “ his”

“… tropical fruit is pineapple, despite their”

→ 1. “ spikiness” 2. “ roughness” 3. “ appearance”

“… tropical fruit is pineapple, despite their spikiness”

→ 1. “.” 2. “!” 3. “, showcasing”

“Calvin's favorite tropical fruit is pineapple, despite their spikiness!”

At each stage of generating the next word (token), the system produces a probability distribution of the top possible candidates, and then the system selects one at random, with the top choices being more likely to be selected than the non-top choices. The system can be configured to have no randomness, and only pick the top option, or pick all the possible candidates in complete randomness. It’s been observed that somewhere in the middle has the best outcome.

Memorization→Generalization, Compression, and Long Context

Memorization → Generalization

A system whose goals are to produce next token prediction will likely naïvely memorize the solution.

Provided with enough data, the system is forced to generalize, and if executed well with high quality data, they may do it well.

An observation with building LLMs is that they go through the memorization to generalization transition at some critical amount of data. While some tasks still require memorization, e.g. notable quotes and facts, the generalization of information is how LLMs learn to understand through complex patterns.

Generalization as compression

The generalization is what is often referred to as compression of real world information. The explicit goal of a lot of prior research in this space was around how to minimally store the rich information found throughout the world.

Why do we need such large models?

A system’s ability to produce complex patterns depends largely on its size. There are two core measures of its size, the first is the size of the model, and the second is the amount of data fed into it.

For system size:

A small system is incapable of encapsulate many patterns or complex patterns.

A large system is capable of encapsulating many complex patterns.

An arbitrarily large system is capable of encapsulating patterns of arbitrary complexity.

For data size:

A system with very little data is able to learn very little

A system with a lot of data, but poor quality data or data with not a lot of information (e.g. repetitive), also learns very little

A system with diverse, high quality, and information rich data learns a lot

The empirical observations are that these systems exhibit power-law scaling with respect to increases in system size, data size, and compute resources, which has fueled the race among tech giants and well-funded startups to spend billions of dollars building bigger and more powerful models. The training cost for just OpenAI’s ChatGPT4 exceeds $100 million.

Conceptually Simple: Long Inputs

Long inputs only require that the LLM be set up to accept the longer inputs, a.k.a. Context Length. In the current implementation, there is a limit to how long they can be, but the limits are getting really large (Google’s Gemini’s acceptable context lengths exceeds the length of all the Harry Potter books.

Mixing Patterns

What happens when you combine multiple different next-token and join the distributions to produce the next token / word task?

The result is that they produce surprisingly good results of the intersection between multiple patterns (each of the concepts bolded for emphasis).

Prompt: “Write a metered sonnet about Materials Science in the style of Shakespeare”

Upon the anvil of the world so vast,

In forge of flame and intellect combined,

The craft of Materials Science cast,

A realm where art and physics are entwined.With metals, polymers, ceramics bright,

Each substance shaped by hands both firm and wise,

Their structures morph from darkness into light,

Beneath the keen Materials Scientist's eyes.In lattice bound, the atoms dance and play,

Their secrets told in whispers soft and clear,

From carbon's bond to silicon's array,

The elements their mysteries unveil.So let us hail this noble, silent quest,

Where through Materials Science, we're truly blessed.

This is a simplification of what actually happens within LLMs, but the intuition is the same: LLMs are extremely adept at blending multitudes of patterns in novel ways.

Bias in LLMs

LLMs are simply learning patterns in the data provided to the system. There’s an old saying in machine learning, which is “Garbage In, Garbage Out”. If the training data is garbage, then the output will also be garbage. Similarly, there’s “racism in, racism out”, in that if you train the LLMs with racist content, then the produced sentences will be racist.

It’s extremely difficult to tease out the subtle forms of discrimination that statistically exists in the dataset. Without going into it too deeply, this is an area rife with issues of Statistical Discrimination (wikipedia).

Prompt Sensitivity

Different models, e.g. ChatGPT, Gemini, Claude, etc. are sensitive to how you “talk” to it (a.k.a. prompting) because each of the models are trained and tuned with different data sources that have gone through various custom refinements to specific styles. This is done on the model’s part to ensure more consistent training and quality of the model, and therefore requires users to “talk” / interact to them in different manners.

“show your work” & “tipping”

It’s been thoroughly demonstrated that asking LLMs to “show your work” or “offer tips” improves model performance. Is it because these models are sentient?

No.

It’s just a reflection of the training data. It’s trained off of human generated information, and higher quality responses are associated with questions asking for thoroughness (“show your work”) or provide extrinsic motivation (“tipping”). The models goals are to be able to predict and match the behavior form its training data, which is all human psychology.

Prompt Engineering a.k.a. High EQ Engineers

In order to be adept at working with these language models, the users need high EQ, because the systems are built to reflect human emotions, thinking, and reasoning. Fundamentally, the way to interact with these systems require empathy for how humans would respond, and therefore requires skillsets often unassociated with engineers (though great engineers have always required great EQ).

I understand that a profession that requires primarily writing paragraphs of English is a weird way to think about “engineering”, but I think the title is appropriate given that they are experts on working with these systems, similar to how a python programmer is proficient in writing in a way that makes the most sense to the python programming language.

LLMs understand, but do not reason

Caveat: This section addresses the limitation of LLMs, but these limitations are reflective of the current technology, not necessarily the limitations of all future language models; after all, humans are also language models (and many other things as well).

The patterns that LLMs are able to encapsulate and emulate are extremely complex, and an arbitrarily large and well trained system will be able to emulate arbitrarily large and complex and patterns. We have established this to be a form of understanding. However, there are really important limitations to the technology that we need to understand, namely that LLMs cannot perform computation, or analogously, LLMs do not reason.

Pattern Matching / Understanding: Chess ✅

LLMs are capable of learning the complex space of chess because it has been given enough data to learn about the structure of chess, what are valid moves, similar to how LLMs have learned about the complex structure of English grammar (and what are valid words at which time). It also helps that there is so much content related to chess that the language model can learn from.

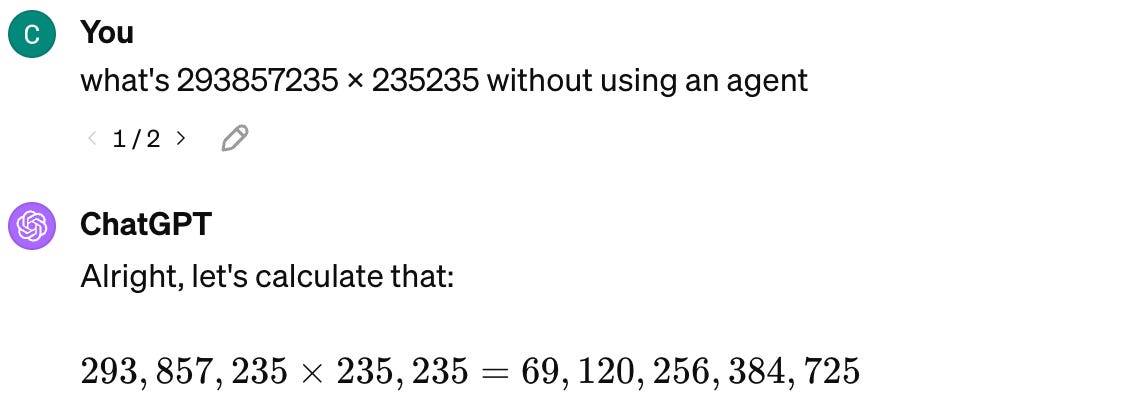

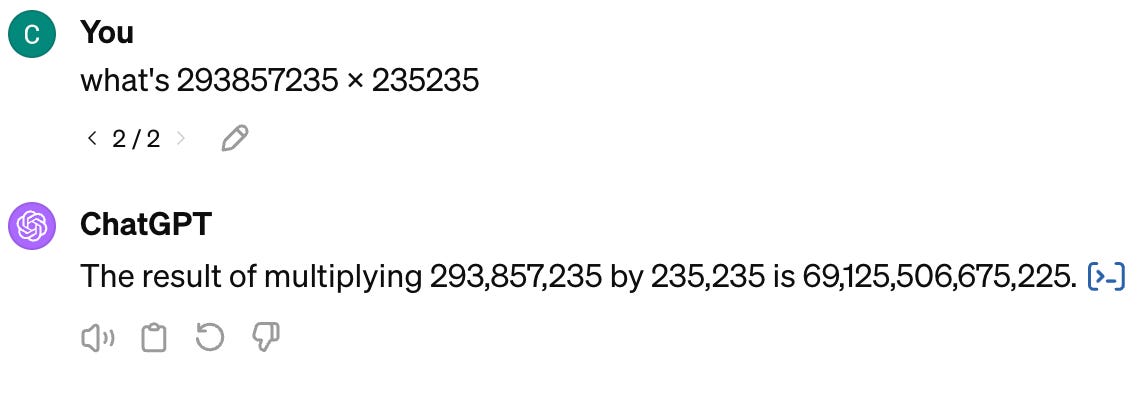

Computation / Reasoning: Multiplication 👎

What is easier than chess to most, but something LLMs cannot do, is long multiplication. Short and simple multiplication (6 x 7 = 42) is something that LLMs can memorize, and there are simpler patterns that it can learn. Long multiplication is a domain where the data is so dense that it’s impossible for a finitely sized system to memorize all the permutations. The only efficient methods are to implement one of the many multiplication algorithms, which has complex recurring structures, which naïvely LLMs cannot handle.

In the illustrations, the LLMs were able to predict that the result is a number, the right number of digits, the fact that the number starts with 69, and ends in a 5, but struggled to come up with the right answer.

Tool Use

LLMs can be taught that certain things are best allocated to another process. This is called tool use by LLMs, and are a way that LLMs can expand their capabilities beyond their limitations. This is not general computation because each of these use cases need to be specifically trained, and the tools themselves are not general reasoning tools.

In theory, LLMs can compute, but…

In theory, LLMs alone could do computations when chained, i.e. asking it to carry out each step of the algorithm as a separate computation (with this, you can prove LLMs are Turing Complete). This method of computation is so prohibitively expensive that it’s practically not done for general computation, but are being explored by researchers in seeing the limits of reasoning by LLMs in solving advanced math, scientific, and even legal / medical problems.

AGI? Not yet.

In my opinion, Artificial General Intelligence (AGI) requires the ability to do general computation and follow algorithms. The lack of such an ability in the current iteration of the LLM technologies means that they fall short of that definition.

I think this is an awesome foundation on which we could achieve AGI, and it feels tantalizingly close and achievable. There’s just a few more large leaps we have to achieve to get there.

LLMs seem magical

In some sense, LLMs are magical, and their rapid development has surprised even the most optimistic machine learning researchers. While the future is always difficult to predict, it’ll definitely be exciting and perhaps we’ll have a breakthrough that creates Artificial General Intelligence. Until then, LLMs remain only a powerful tool for humans, which very likely will displace some jobs while significantly uplevel-ing the productivity of others.

I hope you’ve enjoyed this essay on why LLMs work. Feel free to leave a comment or email me your questions or concerns at wave@cal.vin!